How LLMs Use the Model Context Protocol

2024-05-27 • 7 min read

From Prediction to Action: How LLMs Use the Model Context Protocol

Large Language Models (LLMs) have taken the world by storm — generating human-like responses, solving problems, and acting as powerful assistants. But despite their capabilities, there’s a hard truth:

LLMs alone are not enough.

They can process language, but they see no context beyond what is in the prompt. They can predict text, but they cannot take action on their own. They need something more: a way to connect to tools, services, and real-world execution layers. That’s where the Model Context Protocol (MCP) comes in.

This blog is my own exploration of how LLMs transition from isolated text predictors to intelligent agents capable of real-world impact through MCP.

Why LLMs Need More Than Text Generation

Imagine asking an LLM to:

- Book a calendar event

- Retrieve the latest stock prices

- Summarize a PDF stored in your drive

On its own, it can’t. A bare model has no access to tools, persistent memory, or external APIs. That’s why tool integration is the natural next step.

But simply bolting tools onto an LLM creates messy, hard-to-maintain systems. The solution? A structured, scalable layer: MCP.

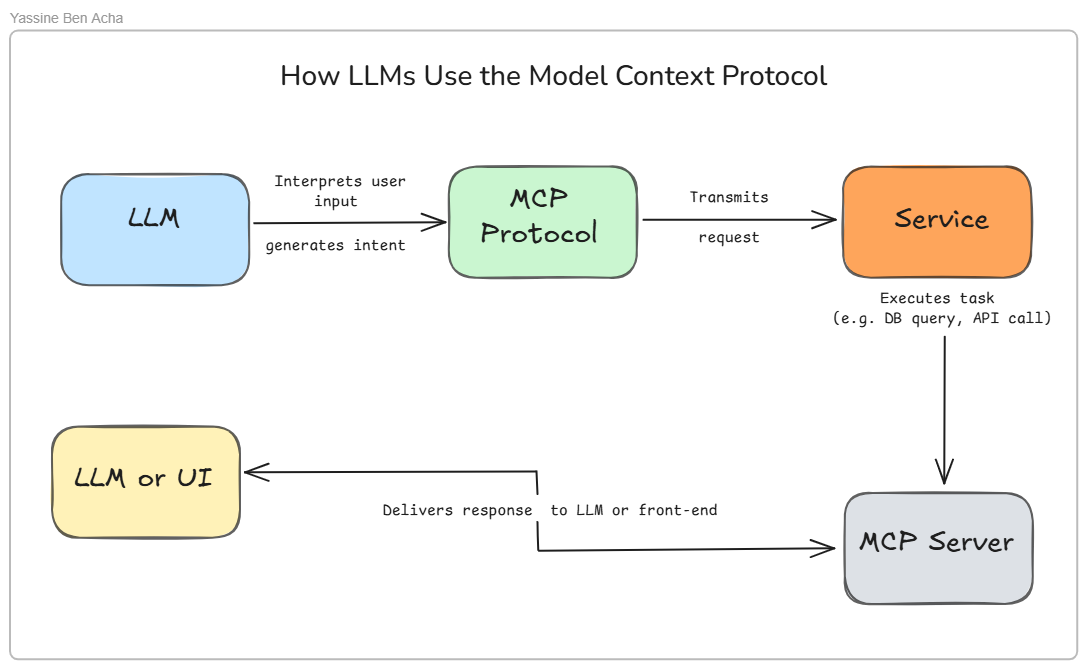

My Visual Take on the MCP Workflow

Here’s how I understand the MCP flow, and how I illustrated it:

- LLM → Listens to the user, interprets intent

- MCP Protocol → Translates that intent into a standardized request

- Service → Executes the action (API call, DB query, etc.)

- MCP Server → Gathers the response

- LLM or UI → Presents the result or continues reasoning

This model helps LLMs act as real agents, able to think, decide, and act across systems.
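The five steps above can be sketched in a few lines of Python. This is only a toy illustration of the flow as described in this post, not an implementation of any official MCP specification. The names `ToolRequest`, `ToolResponse`, `fake_llm_interpret`, `server_handle`, and `run_turn` are all hypothetical, the "LLM" is a keyword check, and the weather service returns canned data.

```python
import json
from dataclasses import asdict, dataclass
from typing import Any, Callable, Dict


@dataclass
class ToolRequest:
    """Standardized request built from the interpreted intent (hypothetical shape)."""
    tool: str
    arguments: Dict[str, Any]


@dataclass
class ToolResponse:
    """Standardized response handed back to the LLM or UI (hypothetical shape)."""
    tool: str
    ok: bool
    result: Any


def fake_llm_interpret(user_text: str) -> ToolRequest:
    """Step 1 stand-in: a real system would ask the LLM to choose a tool."""
    if "weather" in user_text.lower():
        # The city is hard-coded purely for illustration.
        return ToolRequest(tool="get_weather", arguments={"city": "Paris"})
    return ToolRequest(tool="echo", arguments={"text": user_text})


def get_weather(city: str) -> dict:
    """Stand-in service returning canned data instead of calling a weather API."""
    return {"city": city, "forecast": "sunny"}


def echo(text: str) -> dict:
    """Trivial service that returns its input."""
    return {"text": text}


# Services the server knows how to execute, keyed by tool name.
SERVICES: Dict[str, Callable[..., Any]] = {"get_weather": get_weather, "echo": echo}


def server_handle(raw_request: str) -> str:
    """Steps 3 and 4: decode the request, run the service, gather the response."""
    request = ToolRequest(**json.loads(raw_request))
    handler = SERVICES.get(request.tool)
    if handler is None:
        response = ToolResponse(request.tool, ok=False, result="unknown tool")
    else:
        response = ToolResponse(request.tool, ok=True, result=handler(**request.arguments))
    return json.dumps(asdict(response))


def run_turn(user_text: str) -> ToolResponse:
    """Run one user turn through all five steps."""
    request = fake_llm_interpret(user_text)    # step 1: interpret intent
    wire = json.dumps(asdict(request))         # step 2: standardized request
    reply = server_handle(wire)                # steps 3-4: execute and gather
    return ToolResponse(**json.loads(reply))   # step 5: hand result back


if __name__ == "__main__":
    print(run_turn("What's the weather like?"))
```

The point of the sketch is the boundary: the model side only produces and consumes the two JSON shapes, so the services behind `server_handle` can change without touching it.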

Why This Matters (To Me)

Working on AI projects, I kept running into the same two pain points:

- LLMs were brilliant at language but helpless without structure

- Tool usage was clunky and specific to each implementation

MCP changes the game. It gives us a common protocol, clean separation of concerns, and a way to build modular AI systems.
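One way to picture the "common protocol" and "separation of concerns" claims is a small tool registry. The sketch below is a hypothetical design, not part of any MCP library: `ToolRegistry`, `add_event`, and `stock_price` are made-up names, and the price table is canned sample data. The idea is that each tool registers itself with a name and description, and the calling code never needs to change when a tool is added.

```python
from typing import Any, Callable, Dict, List


class ToolRegistry:
    """Maps tool names to callables and keeps a description for each (hypothetical)."""

    def __init__(self) -> None:
        self._tools: Dict[str, Callable[..., Any]] = {}
        self._descriptions: Dict[str, str] = {}

    def register(self, name: str, description: str) -> Callable:
        """Decorator that adds a function to the registry under `name`."""
        def decorator(func: Callable[..., Any]) -> Callable[..., Any]:
            self._tools[name] = func
            self._descriptions[name] = description
            return func
        return decorator

    def describe(self) -> List[Dict[str, str]]:
        """Return the tool catalog, sorted by name, e.g. to show to an LLM."""
        return [
            {"name": name, "description": text}
            for name, text in sorted(self._descriptions.items())
        ]

    def call(self, name: str, **arguments: Any) -> Any:
        """Invoke a registered tool by name with keyword arguments."""
        if name not in self._tools:
            raise KeyError(f"unknown tool: {name}")
        return self._tools[name](**arguments)


registry = ToolRegistry()


@registry.register("add_event", "Create a calendar event (in-memory stand-in)")
def add_event(title: str, day: str) -> dict:
    return {"title": title, "day": day, "status": "created"}


@registry.register("stock_price", "Look up a price from canned sample data")
def stock_price(ticker: str) -> dict:
    prices = {"ACME": 12.5}  # made-up sample data, not a real quote
    return {"ticker": ticker, "price": prices.get(ticker)}


if __name__ == "__main__":
    print(registry.describe())
    print(registry.call("stock_price", ticker="ACME"))
```

Adding a third tool here means writing one decorated function; the dispatcher and the catalog pick it up automatically, which is the modularity the pattern is after.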

As someone who’s building and experimenting with LLM-based workflows, this design pattern feels essential.

Tools I Used in My Own MCP Testing

| Component | What I Used |
|---|---|
| LLM | GPT-4 (OpenAI) |
| MCP Protocol | LangChain, CrewAI (conceptual layer) |
| Service | Python APIs, FAISS, SQL, Weather API |
| Server | FastAPI |
| Frontend/UI | Streamlit |

Final Thoughts

Building autonomous AI agents is no longer science fiction. But doing it well means embracing architectures like MCP.

This blog, and the diagram I created, is my personal effort to understand this shift from LLMs as passive predictors to LLMs as context-aware actors.

I hope it helps you, too. If you’re working on something similar, I’d love to connect.
